Shader Pipeline

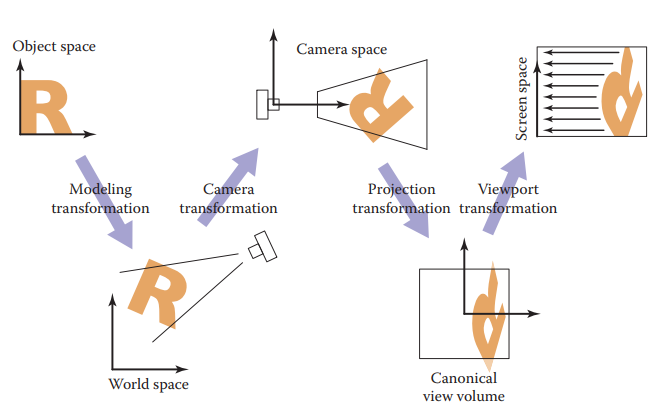

Viewing Transformations

The viewing transformation maps a 3D point \((x, y, z)\) in canonical (world) coordinates to a position \((x, y)\) in the 2D image space. One common system is a sequence of 3 transformations:

- camera transformation \((x, y, z)\rightarrow (x_c, y_c, z_c)\): expresses the point in the camera's frame, given the eye position \(eye\) and the camera's orthonormal orientation basis \(u, v, w\).

- projection transformation \((x_c, y_c, z_c) \rightarrow (x_v, y_v)\), \(x_v, y_v\in [-1, 1]\): maps all the points that are visible in camera space, given the type of projection desired, into the canonical square.

- viewport transformation \((x_v, y_v)\rightarrow (I_x, I_y)\): maps the canonical image square to the desired rectangle in pixel coordinates.
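A minimal sketch of the three stages in plain Python. It assumes \(u, v, w\) are orthonormal and, for the projection step, an orthographic view volume with \(x\in[l, r]\), \(y\in[b, t]\) (the simplest projection type; a perspective projection would also divide by depth). The viewport step assumes OpenGL's convention, where \(-1\) maps to pixel edge 0 and \(1\) maps to \(n_x\). All function names are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def camera_transform(p, eye, u, v, w):
    """World -> camera: coordinates of p - eye in the orthonormal basis (u, v, w)."""
    d = [pi - ei for pi, ei in zip(p, eye)]
    return (dot(d, u), dot(d, v), dot(d, w))

def orthographic_projection(pc, l, r, b, t):
    """Camera -> canonical square [-1, 1]^2 for an assumed orthographic box x in [l, r], y in [b, t]."""
    x, y, _ = pc
    return (2 * (x - l) / (r - l) - 1, 2 * (y - b) / (t - b) - 1)

def viewport_transform(xv, yv, nx, ny):
    """Canonical square -> pixel coordinates of an nx-by-ny image (OpenGL-style: -1 -> 0, 1 -> n)."""
    return ((xv + 1) * nx / 2, (yv + 1) * ny / 2)

# Example: eye at (0, 0, 5), camera looking down -w = -z.
pc = camera_transform((1, 2, 0), eye=(0, 0, 5), u=(1, 0, 0), v=(0, 1, 0), w=(0, 0, 1))
xv, yv = orthographic_projection(pc, l=-2, r=2, b=-2, t=6)
print(pc, (xv, yv), viewport_transform(xv, yv, 640, 480))  # (1, 2, -5) (0.5, 0.0) (480.0, 240.0)
```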

Perspective Projections

Shader Pipeline (OpenGL)

Vertex specification

Set up a Vertex Array Object (VAO), which references one or more Vertex Buffer Objects (VBOs); each VBO stores one kind of per-vertex information. For example, if we load a .obj file, the object's VAO may end up with several VBOs: one storing vertex positions, one storing vertex colors, and another storing vertex normals.
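To make the VAO/VBO split concrete without any OpenGL calls, here is a sketch that reads a tiny .obj string into one flat float list per attribute: each list stands in for one VBO, and the returned dict stands in for the VAO. It assumes triangulated faces written as `f v//vn v//vn v//vn` (positions and normals only); real loaders also handle texture coordinates, colors and other face formats. `obj_to_buffers` is a hypothetical name.

```python
def obj_to_buffers(text):
    """Split a tiny .obj ('v', 'vn', 'f a//na b//nb c//nc' lines only) into per-attribute arrays."""
    positions, normals = [], []           # indexed data, as listed in the file
    vao = {"position": [], "normal": []}  # one flat list per attribute (one "VBO" each)
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            positions.append([float(c) for c in parts[1:4]])
        elif parts[0] == "vn":
            normals.append([float(c) for c in parts[1:4]])
        elif parts[0] == "f":
            for corner in parts[1:]:
                vi, _, ni = corner.split("/")  # "v//vn" -> ["v", "", "vn"]
                vao["position"].extend(positions[int(vi) - 1])  # .obj indices are 1-based
                vao["normal"].extend(normals[int(ni) - 1])
    return vao

OBJ = """
v 0 0 0
v 1 0 0
v 0 1 0
vn 0 0 1
f 1//1 2//1 3//1
"""
buffers = obj_to_buffers(OBJ)
print(buffers["position"])  # 3 vertices x (x, y, z) = 9 floats
```

In an actual OpenGL program, each of these lists would then be uploaded to its own buffer (e.g. with `glBufferData`).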

Vertex shader

Runs once on every vertex, typically applying all the homogeneous transformations, i.e.

- \(M\) modeling transformation: moves the object into world space, applying all the translations, rotations, scaling, etc.

- \(V\) viewing transformation/camera transformation: transforms from world coordinates to camera coordinates.

- \(P\) perspective projection matrix: maps the camera's view volume to clip space, so that only the vertices visible within the camera end up in the window space once \((x, y, z)\) is normalized by \(w\) (this perspective division is done by the fixed-function stage right after the vertex shader).
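A sketch of what the vertex stage computes for one vertex, using row-major 4×4 lists in plain Python. The perspective matrix is assumed to be the common OpenGL-style one (as `gluPerspective` builds it: the camera looks down \(-z\), and the near/far planes map to \(z=-1\) and \(z=1\)). In real OpenGL the shader only outputs the clip-space position `gl_Position` and the division by \(w\) happens afterwards; it is folded in here for illustration. Helper names are hypothetical.

```python
import math

def mat_mul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def mat_vec(A, x):
    return [sum(A[i][k] * x[k] for k in range(4)) for i in range(4)]

def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def perspective(fovy_deg, aspect, near, far):
    """OpenGL-style projection (as gluPerspective builds it); camera looks down -z."""
    f = 1.0 / math.tan(math.radians(fovy_deg) / 2)
    return [[f / aspect, 0, 0, 0],
            [0, f, 0, 0],
            [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
            [0, 0, -1, 0]]

def vertex_shader(pos, M, V, P):
    """Object-space position -> normalized device coordinates."""
    clip = mat_vec(mat_mul(P, mat_mul(V, M)), [*pos, 1.0])  # clip = P V M [x, y, z, 1]^T
    # In OpenGL the shader stops at clip space; the divide by w is fixed-function.
    return [c / clip[3] for c in clip[:3]]

P = perspective(90, aspect=1.0, near=1.0, far=10.0)
print(vertex_shader((1, 0, 0), M=translation(0, 0, -2), V=translation(0, 0, 0), P=P))
```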

Tessellation

Patches of vertex data are subdivided into smaller primitives. The tessellation control shader (TCS) determines how much tessellation to do, a fixed-function tessellator generates the new vertices, and the tessellation evaluation shader (TES) takes the tessellated patch and computes the vertex values for each generated vertex.

For example, Catmull–Clark subdivision surfaces can be evaluated with a TCS/TES pair.
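A CPU sketch of the simplest case, assuming a triangular patch and a single uniform tessellation level (the value a TCS would choose). The nested loops play the role of the fixed-function tessellator by generating barycentric coordinates, and `tes` plays the TES by turning each one into a position (plain linear interpolation here; a real TES might evaluate a curved surface or displace the vertex). This is uniform subdivision, not Catmull–Clark, and the names are hypothetical.

```python
def tessellate_triangle(a, b, c, level):
    """Uniformly split triangle patch abc into level**2 sub-triangles.

    Returns (vertices, triangles); triangles are index triples into vertices.
    """
    def tes(u, v, w):
        # "Evaluation" step: barycentric (u, v, w) -> position (here: linear interpolation)
        return tuple(u * pa + v * pb + w * pc for pa, pb, pc in zip(a, b, c))

    index, verts = {}, []
    # "Tessellator" step: one vertex per barycentric grid point (i/level, j/level, k/level)
    for i in range(level + 1):
        for j in range(level + 1 - i):
            index[(i, j)] = len(verts)
            verts.append(tes(i / level, j / level, (level - i - j) / level))

    tris = []
    for i in range(level):
        for j in range(level - i):
            tris.append((index[(i, j)], index[(i + 1, j)], index[(i, j + 1)]))
            if i + j + 1 < level:  # the "upside-down" triangle next to it
                tris.append((index[(i + 1, j)], index[(i + 1, j + 1)], index[(i, j + 1)]))
    return verts, tris

verts, tris = tessellate_triangle((0, 0, 0), (1, 0, 0), (0, 1, 0), level=3)
print(len(verts), len(tris))  # 10 9
```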

Rasterization

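Given the tessellated primitives, rasterization determines which pixels each primitive covers and fills the primitive with them, generating one fragment per covered pixel (sample).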
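A minimal sketch of coverage testing with edge functions, one common way to rasterize a triangle (not necessarily what a given GPU does internally): a pixel is covered when its center lies on the inner side of all three edges. It assumes 2D pixel-space vertices in counter-clockwise order and pixel centers at \((x + 0.5, y + 0.5)\); real rasterizers also apply tie-breaking rules for pixels exactly on a shared edge, which this sketch skips.

```python
def edge(a, b, p):
    """Twice the signed area of triangle (a, b, p); >= 0 when p is left of a->b (or on it)."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(a, b, c, width, height):
    """Pixels (x, y) whose centers lie inside or on the counter-clockwise triangle abc."""
    covered = []
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # pixel center
            if edge(a, b, p) >= 0 and edge(b, c, p) >= 0 and edge(c, a, p) >= 0:
                covered.append((x, y))
    return covered

pixels = rasterize((0, 0), (4, 0), (0, 4), width=4, height=4)
print(len(pixels))  # 10
```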

Fragment shader

Given a sample-sized segment of a rasterized primitive (a fragment), the fragment shader computes a set of colors and a single depth value. In our case, this amounts to per-pixel coloring.
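As a sketch of the "set of colors and a single depth value" a fragment shader produces, here is a per-fragment function using Lambertian (diffuse) shading, a shading model assumed only for illustration; the normal would come from interpolating vertex normals across the primitive. Names are hypothetical.

```python
import math

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def fragment_shader(normal, light_dir, base_color, depth):
    """Return (rgb, depth) for one fragment with Lambertian diffuse shading (assumed model)."""
    n, l = normalize(normal), normalize(light_dir)
    diffuse = max(0.0, sum(a * b for a, b in zip(n, l)))  # cosine of the angle, clamped at 0
    return tuple(diffuse * c for c in base_color), depth

print(fragment_shader((0, 0, 2), (0, 0, 1), (1.0, 0.5, 0.0), depth=0.3))  # ((1.0, 0.5, 0.0), 0.3)
```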

Note that the pipeline described here is OpenGL's. Other frameworks (WebGL, DirectX, etc.) use different abstractions. For example, WebGL does not support tessellation shaders, so tessellation has to be done in JavaScript (likely on the CPU).

Value Noise and Procedural Patterns

Besides texture mapping, we can also generate patterns algorithmically, i.e. procedural patterns. For example, to make an ocean surface, we can generate a wave mesh and color it by some algorithm instead of mapping a 2D image onto it.

Noise

Note that in reality, many patterns need some sort of "randomness", e.g. the shapes of clouds in the sky, the waves on water, etc.

Properties of Ideal Noise

- pseudo random: given the same input, it always returns the same value.

- dimension: the noise function is some \(N:\mathbb R^d\rightarrow \mathbb R\), i.e. a \(d\)-dim noise function.

- band limited: one frequency dominates all others.

- continuity / differentiability: locally the value changes little (a small change in input gives a small change in output), while globally, values at distant points can differ greatly.
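To see these properties in the simplest setting, here is a sketch of 1D value noise (a simpler construction than the Perlin noise below): a hash assigns each integer a pseudo-random value in \([0, 1)\), and between integers the two neighboring values are blended with a smooth step, so the result is deterministic and continuous. The hash and its mixing constants are arbitrary choices for illustration, not a standard.

```python
import math

def lattice_value(i, seed=0):
    """Pseudo-random value in [0, 1) for integer i: same input -> same output."""
    h = (i * 374761393 + seed * 668265263) & 0xFFFFFFFF  # arbitrary mixing constants
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    h ^= h >> 16
    return h / 2**32

def smooth_step(t):
    return t * t * (3 - 2 * t)

def value_noise_1d(x, seed=0):
    """Blend the random values at the two surrounding integers; continuous in x."""
    i = math.floor(x)
    s = smooth_step(x - i)
    a, b = lattice_value(i, seed), lattice_value(i + 1, seed)
    return a + s * (b - a)

print([round(value_noise_1d(k / 4), 3) for k in range(8)])
```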

Perlin Noise

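Perlin noise is an example of gradient noise, a close relative of value noise that interpolates dot products with random gradients instead of random values. It is pseudo random and continuous, and good at producing marble-like surfaces.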

Algorithm

Grid Definition

Define an n-dim grid where each point has a random n-dim unit-length gradient vector.

Dot product

Assume the 3D case, where each grid cell is a unit cube. A query position \((x, y, z)\) lies in the cell formed by the \(2^3\) grid points \(\{\lfloor x\rfloor, \lfloor x\rfloor + 1\}\times \{\lfloor y\rfloor, \lfloor y\rfloor + 1\}\times \{\lfloor z\rfloor, \lfloor z\rfloor + 1\}\). For each of these corners, compute dotGridGradient: the dot product of the offset vector from that grid point to the query position with the gradient at that grid point, giving \(2^3\) values.

Interpolation

We now have \(2^3\) scalar values, one per corner, and trilinearly interpolate them to get the noise value at the query point.

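Instead of interpolating with the fractional coordinates directly, we pass each of them through a smooth step \(s:[0, 1]\rightarrow [0, 1]\) and use the result as the interpolation coefficient. \(s\) must have the properties \(s(0) = 0\), \(s(1) = 1\), \(s'(0) = s'(1) = 0\); one good smooth step function is

\[s(t) = 3t^2 - 2t^3\]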
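Putting the three steps together, a sketch of 3D Perlin noise in plain Python. Assumptions: each corner's unit gradient comes from a `random.Random` seeded with Python's `hash` of the corner coordinates (deterministic for integer tuples, but slow and not Perlin's permutation-table scheme), and the smooth step is the cubic \(s(t) = 3t^2 - 2t^3\). Apart from mirroring dotGridGradient, the function names are hypothetical.

```python
import math
import random

def grid_gradient(i, j, k, seed=0):
    """Pseudo-random unit gradient for lattice point (i, j, k); same corner -> same vector."""
    rng = random.Random(hash((i, j, k, seed)))
    g = [rng.gauss(0.0, 1.0) for _ in range(3)]  # normalized Gaussian = uniform direction
    n = math.sqrt(sum(c * c for c in g))
    return [c / n for c in g]

def dot_grid_gradient(i, j, k, x, y, z, seed=0):
    """Dot product of the gradient at corner (i, j, k) with the offset to (x, y, z)."""
    gx, gy, gz = grid_gradient(i, j, k, seed)
    return gx * (x - i) + gy * (y - j) + gz * (z - k)

def smooth_step(t):
    return t * t * (3 - 2 * t)  # s(t) = 3t^2 - 2t^3

def lerp(a, b, t):
    return a + t * (b - a)

def perlin(x, y, z, seed=0):
    x0, y0, z0 = math.floor(x), math.floor(y), math.floor(z)
    # 2^3 corner values, indexed c[dx][dy][dz]
    c = [[[dot_grid_gradient(x0 + dx, y0 + dy, z0 + dz, x, y, z, seed)
           for dz in (0, 1)] for dy in (0, 1)] for dx in (0, 1)]
    sx, sy, sz = smooth_step(x - x0), smooth_step(y - y0), smooth_step(z - z0)
    # trilinear interpolation: along x, then y, then z
    cx = [[lerp(c[0][dy][dz], c[1][dy][dz], sx) for dz in (0, 1)] for dy in (0, 1)]
    cy = [lerp(cx[0][dz], cx[1][dz], sy) for dz in (0, 1)]
    return lerp(cy[0], cy[1], sz)

print(perlin(0.3, 1.7, 2.2))
```

At every grid point the result is exactly 0, since the only corner with nonzero weight is the point itself and its offset vector vanishes.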

Improved Perlin Noise

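Note that \(s''(t) = 6 - 12t\) does not vanish at the cell boundaries: \(s''(0) = 6\) but \(s''(1) = -6\), so the second derivative of the noise jumps wherever the interpolation parameter passes through \(t = 0, 1\). Since the normals of a noise-displaced mesh are computed from derivatives of the noise, this lack of smoothness introduces visible discontinuities in these normals along the cell boundaries. Therefore we use the improved smooth step

\[s(t) = 6t^5 - 15t^4 + 10t^3\]

which additionally satisfies \(s''(0) = s''(1) = 0\).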
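A quick check of the two smooth steps and their second derivatives (written out analytically). Leaving one cell means \(t \to 1\) and entering the next means \(t \to 0\), so the jump in \(s''\) across a boundary is \(s''(0) - s''(1)\):

```python
def smooth_step(t):
    return 3 * t**2 - 2 * t**3

def smooth_step_dd(t):  # s''(t) of the cubic
    return 6 - 12 * t

def improved_smooth_step(t):
    return 6 * t**5 - 15 * t**4 + 10 * t**3

def improved_smooth_step_dd(t):  # s''(t) of the quintic
    return 120 * t**3 - 180 * t**2 + 60 * t

for name, dd in [("cubic", smooth_step_dd), ("quintic", improved_smooth_step_dd)]:
    print(name, "jump in s'':", dd(0.0) - dd(1.0))
# cubic jump in s'': 12.0
# quintic jump in s'': 0.0
```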

Also, when a random gradient direction is close to one of the coordinate axes \(e_i\), the noise function can reach very high values (close to 1), causing a "splotchy" appearance. So instead of fully random directions, we choose each gradient randomly from the 12 directions \((\pm 1,\pm 1,0), (\pm 1, 0, \pm 1), (0, \pm 1, \pm 1)\), i.e. the vectors from the center of a cube to the midpoints of its edges.
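A sketch of picking each corner's gradient from the 12 directions instead of drawing a random unit vector. The pseudo-random choice here uses Python's `hash` of the corner coordinates, an assumption for illustration (Perlin's reference implementation uses a permutation table instead). The vectors have length \(\sqrt 2\) and are used without normalization in this sketch.

```python
GRADIENTS = [(1, 1, 0), (-1, 1, 0), (1, -1, 0), (-1, -1, 0),
             (1, 0, 1), (-1, 0, 1), (1, 0, -1), (-1, 0, -1),
             (0, 1, 1), (0, -1, 1), (0, 1, -1), (0, -1, -1)]

def grid_gradient(i, j, k, seed=0):
    """Deterministically pick one of the 12 directions for lattice point (i, j, k)."""
    return GRADIENTS[hash((i, j, k, seed)) % 12]

def dot_grid_gradient(i, j, k, x, y, z, seed=0):
    """Dot product of the chosen direction with the offset from (i, j, k) to (x, y, z)."""
    gx, gy, gz = grid_gradient(i, j, k, seed)
    return gx * (x - i) + gy * (y - j) + gz * (z - k)

print(grid_gradient(3, -1, 2), dot_grid_gradient(0, 0, 0, 0.5, 0.5, 0.5))
```

A side benefit of this choice is that each dot product reduces to adding or subtracting two offset components, with no multiplications needed.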

Bump Mapping and Normal Mapping

Real surfaces are often rough and bumpy; bump mapping achieves the same effect by (conceptually) displacing each point along its normal:

\[\tilde p(p) = p + h(p)\,\hat n(p)\]

where \(p\) is the original position, \(\hat n\) is the normal and \(h\) is the bump height function.

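Then we have to calculate a new normal for the bumped point as

\[\tilde n = \frac{\partial \tilde p}{\partial T}\times \frac{\partial \tilde p}{\partial B}, \quad \text{normalized to unit length afterwards}\]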

where \(T, B\) are the tangent and bitangent vectors: unit vectors orthogonal to \(\hat n\) and to each other, oriented so that \(T\times B = \hat n\).

Note that bump mapping does not actually change the vertex positions; the displaced position is only used to obtain the perturbed normal, which is then used for coloring so that the surface looks "bumpy".
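A sketch of computing the bumped normal at one point with forward finite differences. Assumptions: the height function `h` takes a 3D point; the surface is locally flat, so \(\hat n\) is reused at the nearby samples \(p + \epsilon T\) and \(p + \epsilon B\); \(T\times B = \hat n\); and `eps` \(= 10^{-4}\) is an arbitrary step. Only the normal is returned; the point itself is never moved. Names are hypothetical.

```python
import math

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def scale(a, s):
    return tuple(x * s for x in a)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])

def normalize(a):
    n = math.sqrt(sum(x * x for x in a))
    return tuple(x / n for x in a)

def bumped_normal(p, n, T, B, h, eps=1e-4):
    """Unit normal of the bumped surface q + h(q) n at p, via forward differences along T and B."""
    def bumped(q):
        return add(q, scale(n, h(q)))  # reuses n near p (locally flat surface assumed)
    base = bumped(p)
    dT = scale(sub(bumped(add(p, scale(T, eps))), base), 1 / eps)  # approx. d p~ / dT
    dB = scale(sub(bumped(add(p, scale(B, eps))), base), 1 / eps)  # approx. d p~ / dB
    return normalize(cross(dT, dB))

# A ramp h(q) = q.x tilts the flat normal (0, 0, 1) toward (-1, 0, 1) / sqrt(2).
print(bumped_normal((0, 0, 0), (0, 0, 1), (1, 0, 0), (0, 1, 0), lambda q: q[0]))
```

A constant height leaves the normal unchanged, since shifting every point by the same amount along \(\hat n\) does not tilt the surface.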

Shader Demo

A demo of shaders, noise, and bump mapping