Polynomial Fitting

Goal

Since an image is a discrete mapping of pixels, while the real world is "continuous", we want to fit the pixels by a continuous functions so that we can extract information.

Sliding Window Algorithm

- Define a "pixel window" centered at pixel \((x, y)\) so that an 1D patch can be \((0, y)\sim (2x,y)\)

- Fit a n-degree polynomial to the intensities (commonly \(n \leq 2\))

- Assign the poly's derivatives at \(x=0\) to pixel at windows's center

- Slide window one pixel over until window reaches border

Taylor-series Approximation of Images

Consider a 1D patch from \((0,y)\) to \((2x,y)\)

Consider the Taylor expansion of \(I\) centered at \(0\)

Thus, we can write it as

Note that we have \(2x+1\) pixels in the patch, ranges from \(-x\) to \(x\), let their intensities be \(I_x\). So that we have

where \(I, X\) are known and we want to solve \(d\)

Since this is not always have a solution. We want to minimize the "fit error" \(\|I-Xd\|^2 = \sqrt{\sum^n I_i^2}\)

When \(n = 0\), obviously \(d\) is minimized at mean, i.e. \(\sum^{2x+1} I_i / (2x+1)\)

Weighted least square estimation

If we want to extract more information, or in favor of, the neighboring points, we can have \(I' = \Omega I\) where \(\Omega\) is the weight function.

In 1-D case, let \(\Omega = diag(\omega_1, ..., \omega_{2x+1})\), we want to solve \(\Omega I = \Omega X d\) which is to minimize \(\|\Omega(I-Xd)\|^2\)

Random Sample Consensus (RANSAC)

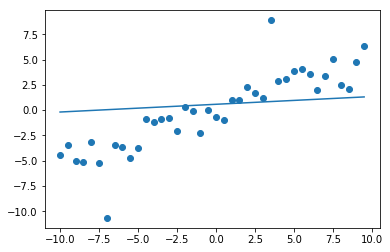

Suppose we have some outliers, then the line fitting with least square is not a good solution.

x = np.arange(-10, 10, 0.5)

y = x * 0.5 + np.random.normal(0, 1, x.shape)

y += (np.random.randn(y.shape[0]) < -1.5) * 8

y -= (np.random.randn(y.shape[0]) < -1.5) * 8

fit = np.polyfit(x, y, 1)

f = fit[0] + fit[1] * x

plt.scatter(x, y)

plt.plot(x, f);

Algorithm

Given:

\(n=\) degree of freedom (unkonwn polynomial coefficients)

\(p=\) fraction of inliers

$t = $ fit threshold

$P_s = $ success probability

\(K=\) max number of iterations

for _ in K:

randomly choose n + 1 pixels

fit a n-degree polynomial

count pixels whose vertical distance from poly is < t

if there are >= (2w+1) * p pixels, mark them as inliers:

do n-degree poly fitting on inliers

return the fitting

# if there is insufficient inliers

return None

Consider the value for \(K\):

Suppose inliers are independent distributed, hence

2D Image Patch

Given an image patch \(I(1:W, 1:H), I\in[0,1]\) is the intensity mapping

2D Taylor Series Expansion

Let \(I(0,0)\) be the center

Directional Derivative

Using 1st order Taylor series approximation.

Let \(\theta\) be the direction, \(v=(\cos \theta, \sin\theta)\) be the unit vector in direction \(\theta\).

Consider the points \((t\cos\theta, t\sin\theta)\), i.e. \(t\) units away from \((0,0)\) in the direction of \(\theta\)

Then consider the directional derivative

Let \(\nabla I = (\partial_xI, \partial_yI)\). Note that

Therefore, the gradient \(\nabla I\) tells the direction and magnitude of max changes in intensity.

Discrete Derivative

Noting that the image are pixels, hence we can approximate the derivative by its limit definition.

Or we can use forward difference \((x+1, x)\) or backward difference \((x,x-1)\) vs. \((x-1,x+1)/2\)

Magnitude \(\|\nabla I(x,y)\| = \sqrt{\partial_xI(x,y)^2 + \partial_yI(x,y)^2}\) is the \(L_2\) norm

Direction \(\Theta(x,y) = \arctan(\partial_xI / \partial_yI)\)

Laplacian

Based on central difference, the approximation to the laplacian of \(I\) is

For 2D functions,

Hence