Image Features - Harris Corner Detector

import cv2

import numpy as np

from matplotlib import pyplot as plt

import seaborn as sns

from scipy.signal import convolve2d

def imshow(img, ax=None, title="", bgr=True):

# since plt and cv2 have different RGB sorting

if bgr:

img = cv2.cvtColor(img,cv2.COLOR_BGR2RGB)

if ax == None:

plt.imshow(img.astype(np.uint8))

plt.axis("off")

plt.title(title)

else:

ax.imshow(img.astype(np.uint8))

ax.set_axis_off()

ax.set_title(title)

plt.rcParams["figure.figsize"] = (12,6)

Image Stitching

When matching to images, and even to merge into one.

Detection identify the interest points

Description extract feature vector around each interest point

Matching determine correspondence between descriptors in two views

The goal is to detect the same points in both images, the number of points must be generated in each image independently, and must have a sufficient number for comparison, while at the same time not too many, which makes the algorithm slow.

What are good characteristics for a interest point

Must have somewhat unique texture in the local sense

Large contrast changes, i.e. gradients

Edge is less useful since line segments can be localized on any place with the same orientation (aperture problem)

Therefore, we need at least two different orientations, which can be reliably matched

Corner Detection

"Corner like" patch can be reliably matched

We should easily recognize the corner "point" by looking through a small window, where there should have a large change in intensity in all directions.

Weighed summed square difference

where \(w: \mathbb N^2 \rightarrow \mathbb R\) is the weighted function, for example indicator \(\mathbb I((x,y) \text{ in window})\), or 2-D Gaussian.

Them, fit \(I(x+u, y+v)\) by a first-order Taylor series expansion about \(x,y\):

Then, we can approximate \(I\) by a series of polynomials, so that we can plugging in for \(E_{WSSD}\)

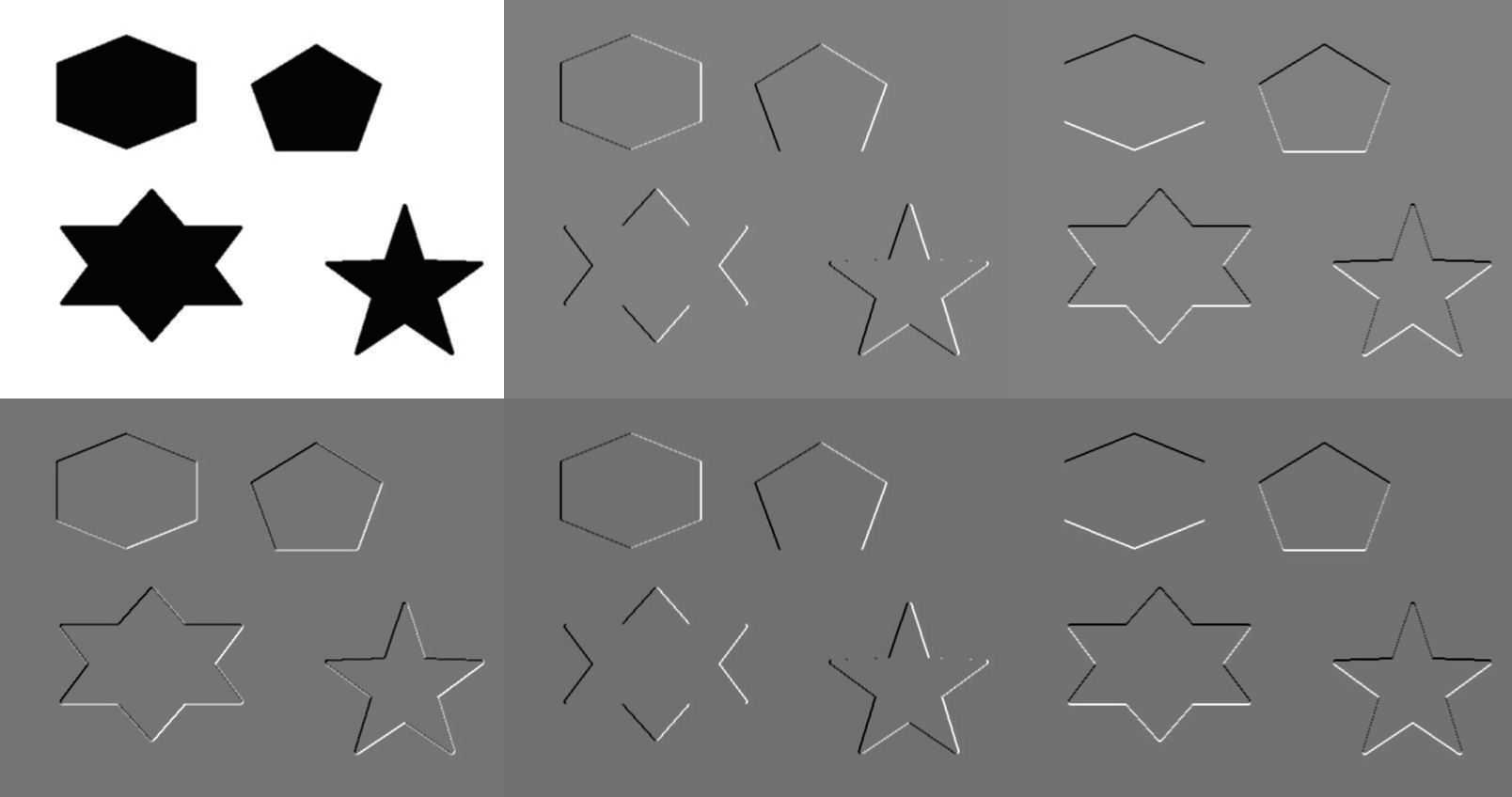

The images are \(I, I_x, I_y, I_{x}I_y, I_{x}I_x, I_{y}I_y\)

Note that \(M\) is independent of \(u,v\).

Consider using an indicator weight function, then \(M = \begin{bmatrix}\sum_{x,y} I_x^2 &\sum_{x,y} I_x\cdot I_y\\\sum_{x,y} I_x\cdot I_y &\sum_{x,y} I_y^2\end{bmatrix}\) defines the shape. Generally, \(E_{WSSD}(u,v)\) will be a ellipse

Note that \(M\) is symmetric \(2\times 2\) matrix and is diagonalizable, i.e. \(M = V diag(\lambda_1, \lambda_2), V^{-1}\), and \(\lambda_{\min}^{-1/2}, \lambda_{max}^{-1/2} \propto\) length of the radii of the ellipse and indicate the direction of of slowest change and fastest change, respectively.

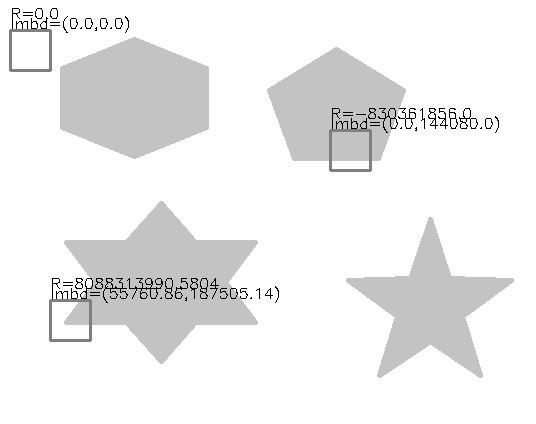

Then, a perpendicular corner will have large lambdas and \(\lambda_1 \approx \lambda_2\), for edges, \(\lambda_a >> \lambda_b\), for "flat" region, lambdas are small.

Harris Corner Detector

Given such characteristics of lambda, we can propose a rotationally invariant criteria

Where \(\alpha \in (0.04, 0.06)\) is a constant coefficient

\(R<0\) then edge

\(R > 0\) then corner

\(|R| < \epsilon\) for some small threshold then flat region

General Procedure

- compute vertical and horizontal gradients \(I_x,I_y\)

- compute \(I_x^2, I_y^2, I_x\cdot I_y\)

- compute Gaussian weighted \(M\) for each pixel

- compute \(R = det(M) - \alpha trace(M)^2\) for each image window, as corner score

- Find points above threshold \(R > S\)

- Non-maximum suppression to keep only the local maxima

Properties

- Rotation and Shift invariant (for Harris corner detector)

- NOT scale invariant (if an image is enlarged, then we cannot find the corner with the same window size)

Source code

import cv2

import numpy as np

import plotly.express as px

img = cv2.imread("../assets/Corners.jpg", cv2.IMREAD_GRAYSCALE).astype(float)

img_blurred = cv2.GaussianBlur(img, (3, 3), 1)

def to_image(data):

return (255 * (data - data.min()) / (data.max() - data.min())).astype(np.uint8)

F_dx = np.array([[-1, 1]])

F_dy = F_dx.T

img_dx = cv2.filter2D(img_blurred, -1, F_dx, delta=122)

img_dy = cv2.filter2D(img_blurred, -1, F_dy, delta=122)

output = np.vstack([

np.hstack([to_image(img_blurred), to_image(img_dx), to_image(img_dy)]),

np.hstack([to_image(img_dx * img_dy), to_image(img_dx * img_dx), to_image(img_dy * img_dy)]),

])

cv2.imwrite("../assets/harris_corner_1.jpg", output)

patch = img[300: 350, 50: 100]

I_x = cv2.filter2D(patch, -1, np.array([[-1, 1]]))

I_y = cv2.filter2D(patch, -1, np.array([[-1], [1]]))

M = np.array([[np.sum(I_x ** 2), np.sum(I_x * I_y)],

[np.sum(I_x * I_y), np.sum(I_y ** 2)]])

us, vs = np.meshgrid(np.linspace(-2, 2, 20), np.linspace(-2, 2, 20))

vec = np.empty((20, 20, 1, 2))

vec[:, :, 0, 0] = us

vec[:, :, 0, 1] = vs

vec_t = np.transpose(vec, (0,1,3,2))

fig = px.imshow((vec @ M @ vec_t)[:, :, 0, 0])

fig.update_xaxes(showticklabels=False)

fig.update_yaxes(showticklabels=False)

with open("../assets/harris_corner_wssd.json", "w") as f:

f.write(fig.to_json())

patches_starts = [(300, 50), (30, 10), (130, 330)]

patch_size = 40

output = img.copy()

for y, x in patches_starts:

patch = img[y:y+patch_size, x:x+patch_size]

output = cv2.rectangle(

output,

(x, y), (x+patch_size, y+patch_size),

(127, 127, 127), 2

)

I_x = cv2.filter2D(patch, -1, np.array([[-1, 1]]))

I_y = cv2.filter2D(patch, -1, np.array([[-1], [1]]))

M = np.array([[np.sum(I_x ** 2), np.sum(I_x * I_y)],

[np.sum(I_x * I_y), np.sum(I_y ** 2)]])

eig = np.round(np.linalg.eigvals(M), 2)

R = eig[0] * eig[1] - 0.04*(eig[0] + eig[1])**2

output = cv2.putText(output, f"lmbd=({eig[0]},{eig[1]})",

(x, y-2),cv2.FONT_HERSHEY_SIMPLEX, .5, (0,0,0), 1)

output = cv2.putText(output, f"R={R}",

(x, y-12),cv2.FONT_HERSHEY_SIMPLEX, .5, (0,0,0), 1)

cv2.imwrite("../assets/harris_corner.jpg", output)