Classification Optimization

Binary Linear Classification

Let the target \(t \in \{0,1\}\) be the binary classification, using a linear function model \(z = \vec w^T \vec x\) with threshold \(I(z \geq 0)\)

where \(\vec x\) is the training data with one more dummy variable \(1\) so that the threshold is always \(0\)

Geometric Picture

Given input \(t = \text{NOT } x, x\in\{0,1\}\)

Input space

the weights (hypothesis) can be represented by half-spaces

The boundary is the decision boundary \(\{\vec x\mid \vec w^T \vec x = 0\}\)

If the training example can be perfectly separated by a linear decision rule, we say the data is linearly separable

weight space

each training example \(\vec x\) specifies a half space \(\vec w\) must lie in to be correctly classified: \(w^Tx >0\) if \(t = 1\) The region satisfying all the constraints is the feasible region. The problem is feasible is the region \(\neq \emptyset\), otw infeasible

Note that if training set is separable, we can solve \(\vec w\) using linear programming

Loss Function

0-1 Loss

Define the 0-1 Loss be

Then, the cost is

However, such loss is hard to optimize (NP-hard considering integer programming)

Note that \(\partial_{w_j} \mathcal L_{0-1} = 0\) almost everywhere (since \(\mathcal L\) is a step function w.r.t \(z\))

Surrogate loss function

If we treat the model as a linear regression model, then

However, the loss function will give large loss if the prediction is correct with high confidence.

Logistic Activation Function

Using logistic function \(\sigma(z) = (1+e^{-z})^{-1}\) to transform \(z = \vec w^T\vec x + b\).

A linear model with a logistic nonlinearity is known as log-linear

In this way, \(\sigma\) is called an activation function

However, for \(z\rightarrow \pm\infty, \sigma(z)\approx 0\) If the prediction is really wrong, you should be far from a critical point

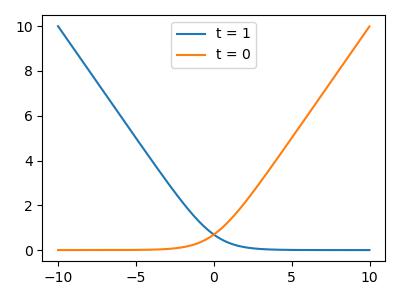

Cross-entropy loss (log loss)

More loss if the prediction is "more" confident about "wrong" answers and not punishing the correct one even not confident

where \(t\in\{0,1\}\)

Logistic Regression

\(z = \vec w^T \vec x+b\)

\(y = \sigma(z) = (1+\exp(-z))^{-1}\)

\(\mathcal L_{CE}(y,t) = -t\log(y) - (1-t)\log(1-y)\)

Gradient Descent

Initialize the weights to something reasonable and repeated adjust them in the direction of steepest descent

\(\alpha \in (0, 1]\) is the learning rate (step size)

When \(J\) converges, \(\partial_{\vec w} J = 0\) at the critical point

Under L2 Regularization

The gradient descent update to minimize the regularized cost \(\mathcal J + \lambda \mathcal R\) results in weight decay

Learning rate

In gradient descent, the learning rate \(\alpha\) is a hyper parameter. (TUT3)

Training Curves

To diagnose optimization problems, plot the training cost as a function of iteration.

However, it's very hard to tell whether an optimizer has converged.

Example: Gradient of logistic loss

Multiclass Classification (Softmax Regression)

One-hot vector/ one-of-K encoding Target

Targets from a discrete set \(\{1,...,K\}\)

For convenience, let \(t\in\mathbb R^K, t_i= \mathbb I(i=k)\) where \(k\) is the classification.

Linear predictions

\(D\) input, \(K\) output, hence we need a weight matrix \(W\)

Otherwise \(Z= Wx^*\) where \(x^*\) is \(x\) padded a column of \(1\)'s.

Softmax Function Activation

A generalization of the logistic function

The input \(z_k\) are the logits

Properties

- Outputs are positive and sum to \(1, (\sum_k y_k = 1)\) so that can be interpreted as probabilities

- If one of \(z_k\) is much larger, than \(softmax(z)_k \approx 1\)

Cross Entropy Loss

Use cross-entropy as the loss function, as from logistic regression

Log is applied element-wise

Gradient descent

Updates can be derived for each row of \(W\)

Source code

import matplotlib.pyplot as plt

import numpy as np

z = np.arange(-10, 10, 0.01)

loss = (np.exp(-z) + 1) ** -1

plt.figure(figsize=(4, 3))

plt.plot(z, loss)

plt.title("logistic function")

plt.tight_layout()

plt.savefig("../assets/classification_logistic.jpg")

plt.figure(figsize=(4, 3))

plt.plot(z, -1 * np.log(loss), label="t = 1")

plt.plot(z, -1 * np.log(1 - loss), label="t = 0")

plt.legend()

plt.tight_layout()

plt.savefig("../assets/classification_loss.jpg")