Examples: Inequality Constrained Finite Dimension Optimization

Example 1

Question

Claim Let \(\lambda_i:\mathbb R^{n(n+1)/2}\rightarrow\mathbb R\) be the function that assigns to a symmetric, \(n\times n\) matrix A its ith smallest eigenvalue for \(1\leq i\leq n\). Prove that \(\lambda_1\) is a concave function and \(\lambda_n\) is a convex function over the space of symmetric matrices.

lemma For all symmetric matrix \(A, \forall x\in\mathbb R^n. \|x\| = 1, \lambda_n(A) \geq x^TAx, \lambda_1(A) \leq x^TAx\).

proof. Since \(A\) is symmetric, by spectral theorem we decompose \(A = Q\Lambda Q^T\) where \(\Lambda = diag(\lambda_n,...,\lambda_1)\) and \(Q\) is orthogonal. Then, for any \(x\in\mathbb R\),

Note that \(y^T y = x^TQQ^Tx = x^Tx = \|x\| = 1\) so that \(\lambda_1 \leq x^TAx \leq \lambda_n\)

proof. For two arbitrary \(n\times n\) symmetric matrices \(A, B\), for some \(c\in [0, 1]\)

similarly,

By definition of concave and convex functions, \(\lambda_1\) is concave, \(\lambda_n\) is convex.

Example 2

Question

Find the regular points for

First, consider the feasible points,

Since \(y^2 = 1 - (x - 1)^2\),

Also, \(x\geq 0\), implies that \(x = 0\) or \(x \geq1\).

So that the set of the feasible points is

Then, we can simply find minimizer on \(\{(x ,y) \mid (x-1)^2 + y^2 = 1, x\geq 1\}\).

Let \(h(x, y) = (x-1)^2 + y^2 - 1, g(x ,y) = x\) so that \(\nabla h = 2((x-1), y), \nabla g = (1, 0)\). All the points on \(S\) are regular.

By Kuhn-Tucker Conditions, take \(\lambda \in\mathbb R, \mu \geq 0\)

If \(g\) is inactive, then solving

gives solution \((1 + \sqrt{1/5}, \sqrt{4/5}), \lambda = -\frac{1}{2\sqrt{1/5}}\) and \((1 - \sqrt{1/5}, -\sqrt{4/5}), \lambda = \frac{1}{2\sqrt{1/5}}\) while \((1 - \sqrt{1/5}, -\sqrt{4/5})\) is not feasible.

If \(g\) is active, from graphically observations, we consider the point \((1, -1)\), which leads to the solution \(\lambda = 0, \mu = 1\).

The candidates are \((1, -1), (1 + \sqrt{1/5}, \sqrt{4/5})\)

If \(g\) is inactive, then

is not positive semidefinite on \(S\).

If \(g\) is active, then

is positive semidefinite on \(S\).

The global minimizer is \((1, -1)\) and the minimum is \(1 - 2 = -1\)

Example 3

Question

maximize \(f(x, y) = xy\) on \(x^2 + y^2 \leq 1, x, y > 0\).

FONC to find candidates.

Note that maximize \(xy\) is equivalent to minimize \(-xy\), Since \(x, y > 0\) are inactive, let \(h(x, y) = x^2 + y^2\), note that \(h\) will be active, otherwise \(f(x, y) =-x y\) have no global minimum. Therefore, take \(\lambda\in\mathbb R\), solves

substitute \(x = \pm \sqrt{1-y^2}, \lambda = \frac{y}{2\sqrt{1-y^2}}\), then \(\sqrt{1-y^2} \pm \frac{y^2}{\sqrt{1-y^2}} = 0\), which gives four solutions \((\pm \sqrt{1/2}, \pm\sqrt{1/2})\), and the only feasible solution is \((\sqrt{1/2}, \sqrt{1/2}), \lambda = 1/2\)

SOC to check

By SOC,

Note that \(L\) is positive semidefinite everywhere as

Therefore, \((\sqrt{1/2}, \sqrt{1/2})\) is the local minimizer of \(-xy\), hence maximizer of \(xy\)

Let \(x = r\sin\theta, y = r\cos\theta\) so that the problem becomes maximize \(r^2\sin\theta\cos\theta\) on \(|r| \leq 1, \theta\in (0, \pi/2)\), which we can farther assume \(r = 1\) since \(\sin\theta\cos\theta > 0\) for \(0 < \theta<\pi/2\). Therefore, the problem becomes maximizing \(\sin\theta\cos\theta\) on \(0<\theta <\pi/2\).

Note that

\(-2\sin(2\theta) < 0\) for \(0 < \theta < \pi/2\) so that the function is concave.

Therefore, set

solve to be \(\theta = \frac{\pi}{4}\) on \(0 < \theta < \pi/2\) and is a maximizer. Therefore, \(x = \sin\frac{\pi}{4} = \sqrt{\frac12}, y = \cos\frac{\pi}4 = \sqrt{\frac12}\) is the maximizer for \(xy\)

Example 4

Question

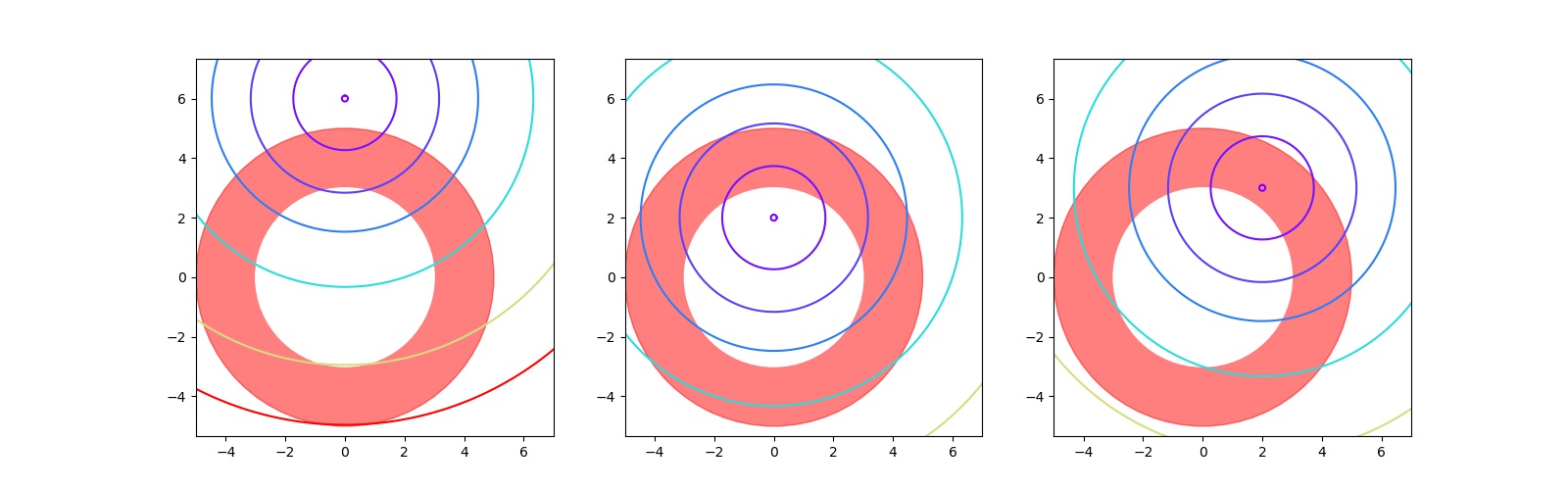

Source code

import matplotlib.pyplot as plt

import numpy as np

x = np.arange(-6, 10, 0.01)

y = np.arange(-6, 12, 0.01)

xx, yy = np.meshgrid(x, y)

c1 = plt.Circle((0, 0), 5, color="red", alpha=.5)

c2 = plt.Circle((0, 0), 3, color="white")

fig, axs = plt.subplots(1, 3, figsize=(16, 5))

axs[0].add_artist(c1);axs[0].add_artist(c2)

axs[0].contour(xx, yy, (xx) ** 2 + (yy - 6)**2,

levels=[0.01, 3, 10, 20, 40, 80, 120], cmap="rainbow")

axs[0].axis("equal");axs[0].set_xlim(-5, 7);axs[0].set_ylim(-5, 7);

c1 = plt.Circle((0, 0), 5, color="red", alpha=.5)

c2 = plt.Circle((0, 0), 3, color="white")

axs[1].add_artist(c1);axs[1].add_artist(c2)

axs[1].contour(xx, yy, (xx) ** 2 + (yy - 2)**2,

levels=[0.01, 3, 10, 20, 40, 80, 120], cmap="rainbow")

axs[1].axis("equal");axs[1].set_xlim(-5, 7);axs[1].set_ylim(-5, 7);

c1 = plt.Circle((0, 0), 5, color="red", alpha=.5)

c2 = plt.Circle((0, 0), 3, color="white")

axs[2].add_artist(c1);axs[2].add_artist(c2)

axs[2].contour(xx, yy, (xx-2) ** 2 + (yy - 3)**2,

levels=[0.01, 3, 10, 20, 40, 80, 120], cmap="rainbow")

axs[2].axis("equal");axs[2].set_xlim(-5, 7);axs[2].set_ylim(-5, 7)

fig.savefig("../assets/icfdoq.jpg")

Question

Show that every feasible point is regular

The set of feasible points is \(S = \{(x, y)\mid 9 \leq x^2 + y^2 \leq 25\}\), a.k.a. a donut. Note that \(g_1 = g_2\) so that to make sure their derivative are not linearly independent, only one of them can be active for some \(a, b\). Then, note that \(\nabla g = (2x, 2y)\neq 0\) for \((x,y)\neq 0\), therefore, on our feasible set, all points are regular.

Question

Find candidates for local minimum points using FONC.

The Kuhn-Tucker conditions gives

Case 1

Suppose both of them are inactive, then the minimizer is \((a, b), f(a, b) = 0\), and for this solution to be feasible, \((a, b)\) satisfies that \(9 \leq a^2 + b^2 \leq 25\)

Case 2

Suppose \(g_1\) is active, solves

solves to be one of

Note that \(\mu_1 \geq 0\), so the only solution is \((5\frac{a}{\sqrt{a^2+b^2}}, 5\frac{b}{\sqrt{a^2+b^2}}), \mu_1 = \frac{\sqrt{a^2 +b^2}}{5}-1\) and this only holds when \(a^2+b^2 \geq 25\)

Case 3

Suppose \(g_2\) is active, solves

solves to be

Note that \(\mu_2\geq 0\), so that \((3\frac{a}{\sqrt{a^2+b^2}}, 3\frac{b}{\sqrt{a^2+b^2}}), \mu_2 = 1- \frac{\sqrt{a^2 +b^2}}{3}\) only holds when \(a^2 + b^2 \leq 9\)

Case 4

\(g_1, g_2\) cannot be active at the same time, as discussed previously

Question

find the tangent space.

If \(g_1\) is active, for \(x_0 = \frac{5}{\sqrt{a^2+b^2}}(a, b)\), \(T_{x_0}M = \{v\in\mathbb R^2 \mid 2\times\frac{5}{\sqrt{a^2+b^2}}\begin{pmatrix}a\\b\end{pmatrix}\cdot v = 0\} = \{v\in\mathbb R^2 \mid av_1+bv_2 = 0\}\).

If \(g_2\) is active, for \(x_0 = \frac{3}{\sqrt{a^2+b^2}}(a, b)\) or \(x_0 = -\frac{3}{\sqrt{a^2+b^2}}(a, b)\), it is still a scaled vector of \((a, b)\), hence \(T_{x_0}M = \{v\in\mathbb R^2 \mid av_1 + bv_2 = 0\}\).

If \(g_1, g_2\) are both inactive, then is unconstrained and the tangent space is \(\mathbb R^2\).

Question

Check with SOC.

When \(9 < a^2 + b^2 < 25, \mu_1=\mu_2 =0\),

is positive semidefinite everywhere, hence \((a,b)\) is a local minimizer.

When \(\mu_1 = \frac{\sqrt{a^2+b^2}}{5} - 1, \mu_2 = 0, a^2+b^2 \geq 25\),

is positive semidefinite everywhere, hence \(\frac{5}{\sqrt{a^2+b^2}}(a, b)\) is a local minimizer

When \(\mu_2 = 1 - \frac{\sqrt{a^2+b^2}}{3}, \mu_1= 0, a^2 + b^2 \leq 9\),

is positive semidefinite everywhere, hence \(\frac{3}{\sqrt{a^2+b^2}}(a, b)\) is a local minimizer

When \(\mu_2 = 1 + \frac{\sqrt{a^2+b^2}}{3}, \mu_1= 0, a^2 + b^2 \leq 9\),

is negative semidefinite everywhere, hence is not a local minimum.

In summary the local minimizer is

Example 5

Question

For \(Q\) be an \(n\times n\) positive symmetric definite matrix, \(a, b\in\mathbb R^{n>0}, c \in\mathbb R^{>0}\).

minimize \(\frac{1}{2}x^TQx - b^Tx\) subject to \(a^Tx \leq c, x > 0\).

Take \(\mu_0\) and Kuhn-Tucker conditions gives equations

Because all the constraints on \(x > 0\) are inactive, they will be excluded from considerations.

Suppose unconstrained, then the minimizer for the quadratic form will be \(x^* = Q^{-1}b\), since \(Q\) is symmetric positive definite, \(Q^{-1}\) is also symmetric positive definite, also \(b > 0\) so that \(x^* > 0\), hence such minimizer can exist.

Suppose \(\mu_0 \neq 0, \mu_1,...,\mu_n = 0\), then we have

it can be solved as

Note that since \(\mu_0 > 0\), we must have \(a^TQ^{-1}b > c\).

Also, note that for any \(x\),

Since \(Q\) is positive definite everywhere, any candidate will be a minimizer.

In summary The minimizer is

Example 6

Question

minimize \(f(x) = -\sum_{i=1}^n \log(a_i+x_i)\) subject to \(x_1,...,x_n\geq 0, \sum x_i = 1\).

Part (a)

Show \(x_i = \max\{0, \frac{1}{\lambda}-a_i\}\) for some \(\lambda \in\mathbb R\).

Take \(\lambda\in\mathbb R, \mu = (\mu_1,...,\mu_n)\in\mathbb R^{n\geq 0}\), by Kuhn-Tucker conditions

for \(i = 1,...,n\)

for some \(i \in \{1,..,n\}\), if \(\mu_i\) is active, then \(x_i = 0\), \(\lambda = \frac{1}{a_i} + \mu_i\).

if \(\mu_i\) is inactive, then \(\lambda - \frac{1}{a_i + x_i} = 0\Rightarrow x_i = \frac{1}{\lambda} - a_i\),

by our constraint, \(x_i \geq 0\) so that \(x_i = \max\{0, \frac1{\lambda} - a_i\}\)

Part (b)

Show that \(\lambda\) is unique for each \(a\).

Take some \(\lambda_1\) s.t. \(\sum_{i=1}^n \max\{0, \frac{1}{\lambda_1} - a_i\} = 1\), For any \(\lambda_2 \neq \lambda_1\),

Suppose \(\frac{1}{\lambda_2} < \frac1{\lambda_1}\), then \(\max\{0, \frac{1}{\lambda_2} - a_i\} \leq \max\{0, \frac{1}{\lambda_1} - a_i\}\). Also, to make \(\sum_{i=1}^n \max\{0, \frac{1}{\lambda_1} - a_i\} = 1\), there exists some \(j \in \{1,...,n\}, \frac{1}{\lambda_1} - a_j > 0\), so that \(\max\{0, \frac{1}{\lambda_2} - a_j\} < \frac{1}{\lambda_1} - a_j\), therefore

Suppose \(\frac{1}{\lambda_2} > \frac1{\lambda_1}\), then \(\max\{0, \frac{1}{\lambda_2} - a_i\} \geq \max\{0, \frac{1}{\lambda_1} - a_i\}\), similarly pick \(j\) s.t. \(\max\{0, \frac{1}{\lambda_2} - a_i\} > \frac1{\lambda_1} - a_i\) so that

Therefore, I have shown that any other \(\lambda_2\) will not satisfy the constraint, hence such \(\lambda\) is unique.

Example 7

Question

Consider \(f(a, b) = \min_{(x,x^2)\in\mathbb R^2}|(x-a, y-b)|^2\). Write the FOC for a minimizer \((x_0, y_0)\).

For each given \((a, b)\), define

so that \(\min_{x\in\mathbb R}F(x) = \min_{(x,x^2)\in\mathbb R^2}|(x-a, y-b)|^2\) and the derivative of \(F\) is given as

since \(F\) is defined on \(\mathbb R\), the FOC is that

Question

Find the cubic equation \(x_0\) must satisfy.

\(x_0\) must satisfy the FOC, a.k.a.

Question

Find conditions on \(x_0\) that guarantee that \((x_0, x^2_0)\) is a local minimizer.

Using SOC, note that

is postive semidefinite iff \(12x^2 + 2 - 4b > 0\Rightarrow |x| > \sqrt{\frac{2b-1}{6}}\)

Example 8

Question

Let \(A\) be \(m\times n\) matrix and \(b \in\mathbb R^m, c\in\mathbb R^n\), consider the "primal problem".

and the "dual problem

Part (a)

Write the FONC for the primal optimal solution \(x^*\).

First, maximizing \(c^Tx\) is equivalent of minimizing \(-c^Tx\).

Let \(f(x) = -c^Tx, \nabla f(x) = -c\).

Let \(g_i(x) = A_{i\cdot}x - b_i\) for \(i=1,2,...,m\) where \(A_{i\cdot}\) is the \(i\)th row of \(A, \nabla g_i(x) = A_{i\cdot}\).

Let \(h_j(x) = -x\) for \(j = 1,2,...,n, \nabla h_j(x) = -e_j\) where \(e_j\) is the elementary vector.

Using FNOC, take \(p_{1*},..., p_{m*} \geq 0, \mu_1,...,\mu_n\geq 0\)

Let \(p_* = (p_{1*},..., p_{m*})\in\mathbb R^m, \mu = (\mu_1,...,\mu_n)\in\mathbb R^n\), the FNOC can be simply written as

Part (b)

Show that the Lagrange mutipliers \(p_*\) for the optimal primal \(x_*\) satisfy the constraints of the dual problem.

For some Lagrange multiplier \(p_*\), we have \(A^Tp_* = \mu+c\) and since \(\mu\geq 0\), \(A^Tp_* \geq c\), also \(p_*\geq 0\), which satisfy the constrained of the dual problem.

Part (c)

Use the comlementary slackness conditions for the primal problem to show that \(p_*^TAx_* = p_*^Tb\).

For some Lagrange multiplier \(p_*\), we have

taking transpose on both sides,

Part (d)

Write the FONC for the dual optimal solution \(p^*\).

Let \(f(p) = b^Tp, \nabla f(p) = 0\),

Let \(g(p) = - A^Tp + c, \nabla g(p) = -A\)

Let \(h(p) = -p, \nabla p = -I\)

Using FNOC, take \(x_*\in\mathbb R^n, v\in\mathbb R^m\), we have

Part (e)

Show that the Lagrange mutipliers \(x_*\) for the optimal dual \(p_*\) satisfy the constraints of the primal problem.

For some Lagrange multiplier \(x_*\), we have \(Ax_* = b-v\) and since \(v\geq 0\), \(Ax_* \leq b\), also \(x_*\geq 0\), which satisfy the constrained of the dual problem.

Part (f)

Use the comlementary slackness conditions for the dual problem to show that \(p_*^TAx_* = c^Tx_*\).

For some Lagrange multiplier \(x_*\), we have

Part (g)

Use the complementary slackness conditions to show that \(c^Tx_* = p_*^Tb\).

combine part(c) and part(f), we have

Example 9

Question

Consider the "continuous" optimization problem

The solution is given in the way that \(I_s = \{x\mid x(x) \leq s\}\), the volume \(|I_s| = \int_{I_s}1dx\) of \(I_s\) is an increasing function of \(s\), choose \(s_0\) s.t. \(|I_{s_0}| = 1\), the optimal solution is

In economic terms, \(h(x)\) is the density of a given resource as a function of the location \(x\) and \(c(x)\) is the cost of the resource at location \(x\). This problem is about wanting to accumulate total of 1 unit of the resource in such a way as to minimize the cost.

Explain why the solution makes sense in economic terms.

We are collecting all resources lower than some cost \(s_0\) and with this cost, we are able to collect exactly enough resources (1 unit of recourses).

Consider the discrete problem

where \(n\geq N\) and \(N\in\mathbb N\), \(c_i\)'s are all distinct.

Prove that except for possibly one \(i\), \(h_i\) is \(0\) or \(1\) for all other \(i\)'s.

Let \(f(h) = c^Th\) where \(c = (c_1,...,c_n), h = (h_1,...,h_n), 0\leq h \leq 1, \nabla f(h) = c\),

Let \(g(h) = \vec 1^Th\) where \(\vec 1\) is the \(\mathbb R^n\) vector with all entries being 1, \(\nabla g(h) = \vec 1\),

Note that \(0 \leq h \leq 1\) gives to constraints \(-h \leq 0, h\leq 1\).

By Kuhn-Tucker conditions, take \(\lambda\in\mathbb R, \mu_1\in\mathbb R^n, \mu_2\in\mathbb R^n\).

Suppose for some \(h_i, h_j, 0 < h_i < h_j < 1\) then we must have \(\mu_{1i} = \mu_{2i} = \mu_{1j} = \mu_{2j} = 0\), then note that

implies that \(c_i = c_j\), contradicts with the fact that all \(c_i\)'s are distinct.

Therefore, by contradiction, the statement is proven.